What & Why MBSE…

To summarize from our last blog on “what is systems engineering?”:

You’re developing complex multi-domain products using classic practices while living inside silo-based organizations, which produce matching siloed products that are difficult to integrate. Grasping at straws, we begin automating the silos with siloed model-based systems engineering (MBSE) tools.

INCOSE defines MBSE as:

… is the formalized application of modeling to support system requirements, design, analysis, verification and validation activities beginning in the conceptual design phase and continuing throughout development and later life cycle phases (INCOSE Systems Engineering Handbook, INCOSE Vision)

…or in my own layman’s terms, applying models (of all kinds) to systems engineering.

Why MBSE?

Remember, we need to apply models to the practice of systems engineering because our legacy text-based practices don’t scale to current levels of product complexity (millions of lines of code, hundreds of ECUs, thousands of changes, a complex regulatory environment, etc.). So we naturally attempt to automate individual aspects of systems engineering, such as requirements, system modeling, performance modeling, and reliability modeling, to answer pressing questions like, “Does the product do what it is required to do, in the time it’s supposed to do it, in a reliable way, within our cost targets?” In other words, we automate in silos.

After graduating from engineering school, I went to work in a typical high-tech siloed organization. I was assigned to a tiger team that got called up when something went wrong on the production lines. Working alongside some very experienced teammates, we noticed that many of the problems we were working on looked familiar. Often we were dealing with the same problems over and over again, and they were caused by other silos in the organization (e.g. purchasing, legal, IT, or manufacturing). We wondered, “How long does a problem, once solved, take to come back?” In high tech it was ~6 months to a year, which mysteriously matched the typical project cadence. We checked other industries, like automotive (~3 years) and aerospace (~15 years), and found that the problem-resurface metric matched their project cycles too. What’s going on? The experience with the problem leaves the program when the program ends. The next project working on a similar technology doesn’t have the benefit of the lessons learned and repeats/rediscovers the problem. (See the previous blog on this topic, “Integrated MBE Solutions: preventing insane product development”.)

Walking in circles…

Because the tools live in silos, they don’t help prevent these problems from coming back, so we tend to repeat the same problems over and over, resulting in problem déjà vu. We know we’ve seen it before, and we know we are in trouble or lost, because we see the same landmarks going by and start to wonder if we are walking in circles.

Interestingly, the Max Planck Institute for Biological Cybernetics ran a study to see if lost people really do walk in circles (documented in Current Biology, Sept. 29, 2009) by putting GPS trackers on volunteers and asking them to walk in a straight line. The resulting graphs document the walks in different types of terrain.

It turns out people DO walk in circles. Why? Because they are “increasingly uncertain about where straight ahead is.”

They repeated the test with blindfolds (3rd, white graph below) and the circles got tighter (as small as 20 meters). Their bottom-line conclusion: “We walk in circles when we are missing guidance cues.”

Applied to our MBSE topic, the purpose of MBSE models of all kinds (cost, system models, reliability, requirement tracing, etc.) is to provide cues on where straight ahead is, so we can take the shortest path between two points: program start and product delivery.

You’re not going to solve this “walking in circles” problem by only automating the silos. Many organizations have large, trustworthy requirement repositories, test procedure baselines, standard parameter libraries, and other silo-based repositories. However, because those cues are locked up in siloed tools, they can’t tell us where we are, so we can’t follow a straight path. The result is tunnel vision: we forget why we are gathering requirements, standard parameters, etc., and start thinking the value is in the gathering. The value of requirements, controlled test cases, or standard parameters isn’t in managing them; it’s in knowing where they go and where they apply. Knowing that this version of a requirement is tied to this version of this part, verified by these test cases, executed on these test sets, calibrated on this date, gives us the cues that keep the project on a straight line as things inevitably change.

“Spec-Design-Build” vs “Integrate-THEN-build” development…

While I was working in the high-tech electronics industry, the regulatory people told me that they were dealing with ~1200 regulatory changes per month. You can imagine the amount of time consumed by working out where those changes applied in order to bring the organization into compliance. This aligns with studies of systems engineering effort, which claim that engineers spend half their time managing change, finding the right information, etc. Of course, while you are looking for impacts, everyone else is moving on without you, and you have an escape in the making.

To keep up, you need to think continuously. This requires continuous updates, analogous to what GPS guidance systems do for aircraft, ships, and vehicles. Instead of taking periodic sightings during critical design reviews, we must continuously adjust our course per the latest cues to stay pointed straight ahead (this also helps us react quickly to customer course changes).

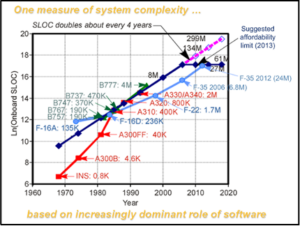

The aerospace industry has recognized this affordability problem and funded the Aerospace Vehicle Systems Institute (AVSI.aero based at Texas A&M University) to help “achieve quality at an affordable price.” They have a number of projects including gathering various aircraft complexity measurements.

Here’s a graph describing the effect of software on the problem:

This is what Norm Augustine (legendary LMCO CEO) postulated back in the ’70s: the data shows we’ve reached the point where we cannot afford to make aircraft. They state we can no longer afford the “spec-design-build” practice. We need to switch to an “Integrate-THEN-Build” practice or, as they put it, “start integrated, stay integrated”.

This switch to continuous integration will require not just managing systems engineering data but relating it to other things, so you can see where it goes now rather than later (i.e. a straight line). It needs to move fast, because everyone else on the program is moving on with or without you (they are starting to wander already).

This means that systems engineering artifacts, relationships, etc. need to participate in the product baseline, so that requirements and parameters can be tied into the program management systems and, when things change, you can see the cues: where they go now rather than later. So, when a customer changes a requirement, you can see what version of what parts are affected, what tasks are affected in the program schedule, what resources are affected, what test cases need to be re-executed, what test equipment is required, what manufacturing processes are affected, etc. That demands more than just managing requirements, test procedures, and product data; it requires managing the relationships, which Dr. Martin Eigner refers to as System Lifecycle Management (SysLM).
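To make the idea of "managing the relationships" concrete, here is a minimal sketch in Python of a change-impact query over trace links. It is an illustration under stated assumptions, not how any particular PLM or MBSE tool works: the `TraceGraph` class, its methods, and every identifier such as `REQ-12 v3` or `RIG-hil-2` are hypothetical names invented for this example.

```python
from collections import deque


class TraceGraph:
    """Hypothetical store of directed trace links between versioned artifacts."""

    def __init__(self):
        # Maps an artifact to the set of artifacts that depend on it.
        self._links = {}

    def link(self, source, target):
        """Record that `target` depends on (is affected by) `source`."""
        self._links.setdefault(source, set()).add(target)

    def impacted_by(self, changed):
        """Return every artifact reachable from `changed` via trace links."""
        seen = {changed}
        queue = deque([changed])
        while queue:
            item = queue.popleft()
            for dependent in self._links.get(item, ()):
                if dependent not in seen:
                    seen.add(dependent)
                    queue.append(dependent)
        # The changed artifact itself is not reported as an impact.
        return seen - {changed}


# Illustrative data only: one requirement traced to a part, a test case,
# a schedule task, a test rig, and a manufacturing process.
graph = TraceGraph()
graph.link("REQ-12 v3", "PART-brake-ecu v7")
graph.link("REQ-12 v3", "TEST-044")
graph.link("PART-brake-ecu v7", "TASK-integrate-ecu")
graph.link("PART-brake-ecu v7", "PROC-final-assembly")
graph.link("TEST-044", "RIG-hil-2")
graph.link("REQ-99 v1", "PART-radio v2")

for artifact in sorted(graph.impacted_by("REQ-12 v3")):
    print(artifact)
```

The point of the sketch is that the answer to "what does this requirement change touch?" comes from the links, not from any single repository. A requirements tool alone could list `REQ-12 v3`, but only the relationships reveal the affected part, task, test case, rig, and process.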

Integrating systems engineering, supported by MBSE, with PLM to achieve SysLM is what enables continuous integration. The MBSE tools no longer just provide isolated perspectives on the problem; they collaboratively contribute to a continuous understanding of where straight ahead is, so we can stop walking in circles and handle the complexity of today’s products.

So where are we in achieving SysLM and how do Siemens solutions support this continuous integration approach? How do you get started inside your organization? This will be covered in our next installment…

Please join me for the continued conversation.

Mark Sampson

Systems Engineering Evangelist